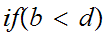

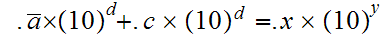

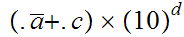

The follow steps are involved in solving the equation

Compare

and

and

shift

the decimal point of

shift

the decimal point of

places

to the left, else shift he decimal point of

places

to the left, else shift he decimal point of

places

to the left. (If

places

to the left. (If

equation

would now looks like

equation

would now looks like

)

)

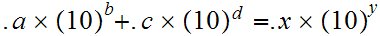

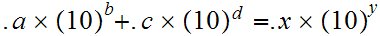

Again, assuming

,compute

,compute

to get the possibly unnormalized answer

to get the possibly unnormalized answer

Round and, if necessary, adjust exponent and significand.

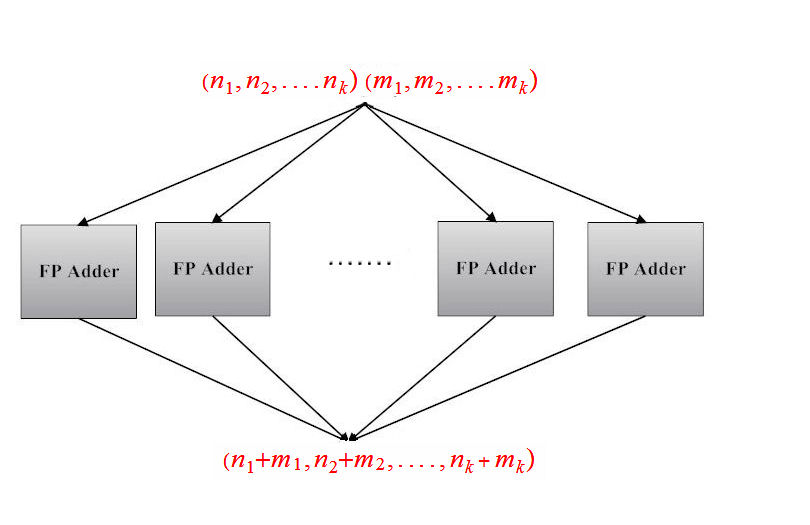

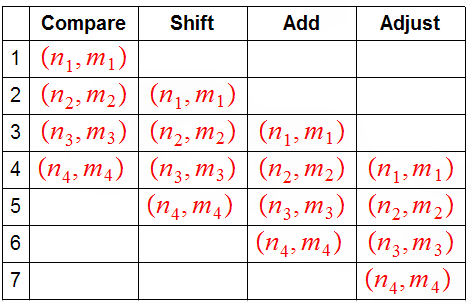
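The steps above can be sketched in Python. This is a minimal sketch under stated assumptions, not a description of real hardware: the `Dec` type, the 4-digit precision `DIGITS`, and round-half-to-even are hypothetical choices for illustration. Values are stored as an integer significand times a power of ten, and the alignment step keeps every shifted-out digit exactly, whereas real binary hardware keeps only a few guard, round, and sticky bits.

```python
from dataclasses import dataclass

DIGITS = 4  # assumed precision: significands are normalized to 4 decimal digits


@dataclass(frozen=True)
class Dec:
    """Hypothetical decimal float whose value is sig * 10**exp."""
    sig: int
    exp: int


def _round_half_even(n: int, drop: int) -> int:
    """Remove the lowest `drop` digits of n >= 0, rounding half to even."""
    q, r = divmod(n, 10**drop)
    half = 5 * 10**(drop - 1)
    if r > half or (r == half and q % 2 == 1):
        q += 1
    return q


def fp_add(x: Dec, y: Dec) -> Dec:
    # Step 1: compare exponents so that x holds the larger one (p >= q).
    if x.exp < y.exp:
        x, y = y, x
    # Step 2: align. Shifting y's decimal point (p - q) places left is done
    # exactly by expressing both operands over the smaller exponent q.
    shift = x.exp - y.exp
    # Step 3: add the aligned significands (possibly unnormalized result).
    total = x.sig * 10**shift + y.sig
    exp = y.exp
    if total == 0:
        return Dec(0, 0)
    # Step 4: normalize to DIGITS digits, rounding if digits must be dropped.
    sign = -1 if total < 0 else 1
    mag = abs(total)
    ndigits = len(str(mag))
    if ndigits > DIGITS:
        drop = ndigits - DIGITS
        mag = _round_half_even(mag, drop)
        exp += drop
        if mag == 10**DIGITS:  # rounding carried into a new digit
            mag //= 10
            exp += 1
    else:
        pad = DIGITS - ndigits
        mag *= 10**pad
        exp -= pad
    return Dec(sign * mag, exp)


print(fp_add(Dec(9999, -2), Dec(1610, -4)))  # Dec(sig=1002, exp=-1)
```

The example adds $9.999 \times 10^{1}$ and $1.610 \times 10^{-1}$; the exact sum $100.151$ rounds to $1.002 \times 10^{2}$ at four digits.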

A blackbox implementation of this process would look like:

The pipeline arithmetic is as follows: assuming each step in the floating-point add takes one clock cycle, a single fp add would take four clock cycles. However, if we could start a second add as soon as the first is done with the "Comparer," it would take five clock cycles to do two fp adds and $n + 3$ cycles to do $n$ fp adds (the first add finishes after four cycles, and each subsequent add finishes one cycle later).

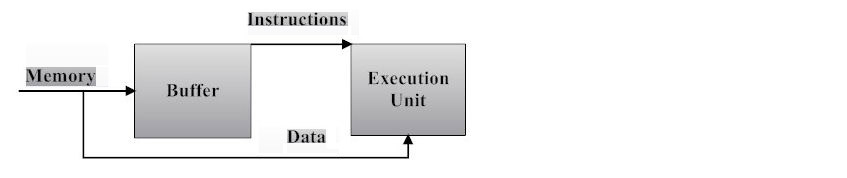
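The cycle counts above can be checked with a small schedule simulation. It assumes four stages, each taking one cycle, and a new add entering the pipeline every cycle; only the "Comparer" stage is named in the text, so the other stage names (`Shifter`, `Adder`, `Normalizer`) are hypothetical labels for the remaining steps.

```python
# Hypothetical stage names; only "Comparer" comes from the text.
STAGES = ["Comparer", "Shifter", "Adder", "Normalizer"]


def schedule(n_ops: int, stages=STAGES) -> dict:
    """Map each cycle to the (add, stage) pairs active in it.

    Add i enters the pipeline at cycle i + 1 and advances one stage per cycle.
    """
    table = {}
    for op in range(n_ops):
        for s, name in enumerate(stages):
            table.setdefault(op + s + 1, []).append((op, name))
    return table


def pipelined_cycles(n_ops: int, k: int = len(STAGES)) -> int:
    """Cycles for n_ops adds on a k-stage pipeline: k + n - 1."""
    return k + n_ops - 1 if n_ops > 0 else 0


def unpipelined_cycles(n_ops: int, k: int = len(STAGES)) -> int:
    """Cycles if each add must finish all k steps before the next starts."""
    return k * n_ops


for cycle, work in sorted(schedule(2).items()):
    print(cycle, ", ".join(f"add{op}:{name}" for op, name in work))
# 1 add0:Comparer
# 2 add0:Shifter, add1:Comparer
# 3 add0:Adder, add1:Shifter
# 4 add0:Normalizer, add1:Adder
# 5 add1:Normalizer
```

In general, $k$ one-cycle stages finish $n$ operations in $k + n - 1$ cycles instead of $kn$, so for large $n$ the speedup $4n/(n + 3)$ approaches 4.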

The IBM 7094 (1962) used an "Instruction Backup Register" to buffer "the next instruction." This was used to overlap the execution of one instruction with the fetch of the next. The result was about a 25% increase in performance.

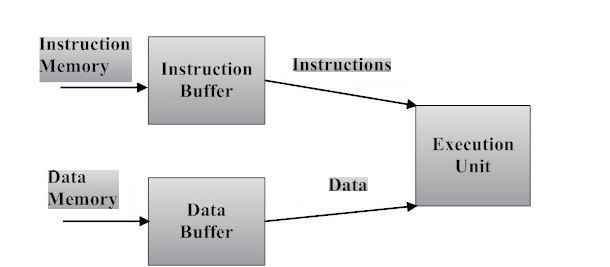

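Another strategy for overlapping execution steps is to completely separate program memory from data memory (the Harvard architecture), so that an instruction fetch and a data access can proceed in the same cycle.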
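A toy cycle-count model shows why separate memories help. It is a hypothetical sketch, not a model of any particular machine: it assumes a five-stage pipeline (`depth=5`) that fetches one instruction per cycle, that a `load` or `store` reaches data memory three cycles after its fetch (`mem_offset=3`), and that a single unified memory can serve only one access per cycle, so a data access blocks any instruction fetch that falls in the same cycle.

```python
MEMORY_OPS = {"load", "store"}  # hypothetical instruction names


def pipeline_cycles(program: list[str], split_memory: bool,
                    depth: int = 5, mem_offset: int = 3) -> int:
    """Cycles to finish `program` on a toy in-order pipeline (assumed model)."""
    if not program:
        return 0
    data_busy = set()  # cycles in which memory is serving a data access
    fetch = 0
    for ins in program:
        fetch += 1
        # Unified memory: a data access in this cycle blocks the fetch (stall).
        while not split_memory and fetch in data_busy:
            fetch += 1
        if ins in MEMORY_OPS:
            data_busy.add(fetch + mem_offset)
    # The last instruction leaves the pipeline depth - 1 cycles after its fetch.
    return fetch + depth - 1


prog = ["load", "add", "add", "add", "add"]
print(pipeline_cycles(prog, split_memory=True),
      pipeline_cycles(prog, split_memory=False))  # 9 10
```

With split memories the fetch never waits on a data access; with one memory, every load or store that collides with a fetch costs a stall cycle.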

Vector and Matrix processing provide examples of programs that perform identical procedures on different data elements. An array of processors operating on a single instruction stream provides a very important example of hardware parallelism.
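A toy model of this arrangement: a control unit broadcasts each instruction to every processing element (PE), and each PE applies it to its own local data. The instruction format `(op, dst, src1, src2)`, the `OPS` table, and the `PE` class are hypothetical. The inner loop runs sequentially here only to model lockstep semantics; in hardware all PEs would execute the instruction at the same time.

```python
import operator
from dataclasses import dataclass, field

# Hypothetical instruction set for the sketch.
OPS = {"add": operator.add, "mul": operator.mul}


@dataclass
class PE:
    """One processing element with its own local registers (its data stream)."""
    regs: dict = field(default_factory=dict)


def run_simd(pes, program):
    """Broadcast each instruction (op, dst, src1, src2) to every PE in lockstep."""
    for op, dst, src1, src2 in program:  # the single instruction stream
        fn = OPS[op]
        for pe in pes:  # same instruction, different data
            pe.regs[dst] = fn(pe.regs[src1], pe.regs[src2])


# Element-wise r = a*x + y on four PEs, one vector element per PE.
xs, ys = [1, 2, 3, 4], [10, 20, 30, 40]
pes = [PE({"a": 2, "x": x, "y": y}) for x, y in zip(xs, ys)]
run_simd(pes, [("mul", "t", "a", "x"), ("add", "r", "t", "y")])
print([pe.regs["r"] for pe in pes])  # [12, 24, 36, 48]
```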