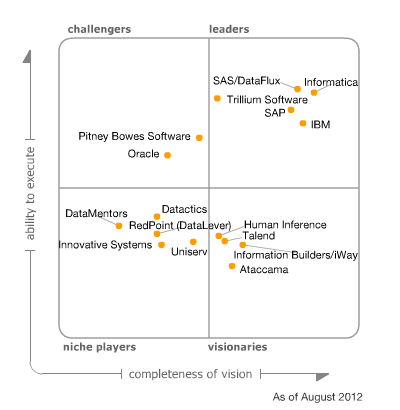

Magic Quadrant for Data Quality Tools

Demand for data quality tools remains strong, driven by more deployments that support information governance programs, master data management initiatives and application modernization efforts. Vendors are expanding their functionality to support non-IT roles and diversifying their deployment models.

Market Definition/Description

Data quality assurance is a discipline focused on ensuring that data is fit for use in business processes ranging from core operations, to analytics and decision-making, to engagement and interaction with external entities.

As a discipline, it comprises much more than technology — it also includes roles and organizational structures, processes for monitoring, measuring and remediating data quality issues, and links to broader information governance activities via data-quality-specific policies.

Given the scale and complexity of the data landscape across organizations of all sizes and in all industries, tools to help automate key elements of the discipline continue to attract more interest and to grow in value. As such, the data quality tools market continues to show substantial growth, while exhibiting innovation and change.

The data quality tools market includes vendors that offer stand-alone software products to address the core functional requirements of the discipline, which are:

- Profiling: The analysis of data to capture statistics (metadata) that provide insight into the quality of data and help to identify data quality issues.

- Parsing and standardization: The decomposition of text fields into component parts and the formatting of values into consistent layouts based on industry standards, local standards (for example, postal authority standards for address data), user-defined business rules, and knowledge bases of values and patterns.

- Generalized "cleansing": The modification of data values to meet domain restrictions, integrity constraints or other business rules that define when the quality of data is sufficient for an organization.

- Matching: Identifying, linking or merging related entries within or across sets of data.

- Monitoring: Deploying controls to ensure that data continues to conform to business rules that define data quality for the organization.

- Enrichment: Enhancing the value of internally held data by appending related attributes from external sources (for example, consumer demographic attributes and geographic descriptors).
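
As a rough illustration of four of the functions listed above (profiling, standardization, matching and monitoring), the following is a minimal Python sketch using only the standard library. It is not drawn from any vendor's product. The function names, the pattern notation (digits shown as 9, letters as A) and the 0.85 similarity threshold are all assumptions chosen for illustration, and commercial tools use far more sophisticated rules and knowledge bases.

```python
import re
from collections import Counter
from difflib import SequenceMatcher


def profile(values):
    """Profiling: capture simple statistics (metadata) about a column."""
    present = [v for v in values if v not in (None, "")]
    # Reduce each value to a shape: digits become 9, letters become A.
    patterns = Counter(
        re.sub(r"[A-Za-z]", "A", re.sub(r"\d", "9", v)) for v in present
    )
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "patterns": dict(patterns),
    }


def standardize(name):
    """Standardization: upper case, no punctuation, single spaces."""
    cleaned = re.sub(r"[^\w\s]", "", name)
    return " ".join(cleaned.upper().split())


def is_match(a, b, threshold=0.85):
    """Matching: treat two entries as related when their standardized
    forms are similar enough (the threshold is an arbitrary assumption)."""
    ratio = SequenceMatcher(None, standardize(a), standardize(b)).ratio()
    return ratio >= threshold


def conformance(values, rule):
    """Monitoring: share of values that satisfy a business rule."""
    if not values:
        return 1.0
    return sum(1 for v in values if rule(v)) / len(values)
```

In this sketch, `profile` would surface a postal code column that mixes four-digit and five-digit values, `is_match` would link "Acme, Inc." with "ACME Inc" after standardization, and `conformance` could be run on a schedule to track whether a rule continues to hold over time.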

In addition, data quality tools provide a range of related functional abilities that are not unique to this market but that are required to execute many of the core functions of data quality, or for specific data quality applications:

- Connectivity/adapters: The ability to interact with a range of different data structure types.

- Subject-area-specific support: Standardization capabilities for specific data subject areas.

- International support: The ability to offer relevant data quality operations on a global basis (such as handling data in multiple languages and writing systems).

- Metadata management: The ability to capture, reconcile and interoperate metadata related to the data quality process.

- Configuration environment: Capabilities for creating, managing and deploying data quality rules.

- Operations and administration: Facilities for supporting, managing and controlling data quality processes.

- Workflow/data quality process support: Processes and user interfaces for various data quality roles, such as data stewards.

- Service enablement: Service-oriented characteristics and support for service-oriented architecture (SOA) deployments.

The tools provided by vendors in this market are generally consumed by technology users for internal deployment in their IT infrastructure. However, off-premises solutions in the form of hosted data quality offerings, software-as-a-service (SaaS) delivery models and cloud services continue to evolve and grow in popularity.

Magic Quadrant

Source: Gartner (August 2012)

Vendor Strengths and Cautions

Ataccama

Headquarters: Stamford, Connecticut, U.S. and Prague, Czech Republic

Products: DQ Analyzer, Data Quality Center, DQ Issue Tracker, DQ Dashboard

Customer base: 150 (estimated)

Strengths

- Breadth of functionality and degree of integration: Ataccama's data quality tools address the major functional requirements in the market, including the basics of parsing, standardization, cleansing and matching, as well as data profiling. They are applicable to a range of data domains, and are well-integrated to provide customers a seamless experience when working through end-to-end data quality processes. In addition, the vendor offers master data management (MDM) capabilities via products based on its data quality technology.

- Usability and user-facing functionality: This area has been identified as a weakness in the past, but Ataccama's user interface has improved during the past year. This contributes to improved usability, which is cited by reference customers as a positive characteristic of the vendor's toolset. In addition, the functionality and workflow for data quality issue tracking and resolution address an area of growing demand and remain a differentiator relative to many competitors.

- Partner channels: Ataccama continues to capitalize on its OEM relationship with Information Builders/iWay, and this provides its main source of revenue, other than its own direct sales in Eastern Europe. In addition, Ataccama has a joint-marketing relationship with Teradata, which is intended to increase the company's market visibility. Given Ataccama's relatively small size and limited profile outside its home region, these partnerships have been the major contributors to the company's above-average revenue growth during the past year.

- Cost model: The free data-profiling capabilities provided by Ataccama in the form of DQ Analyzer, combined with good usability characteristics, contribute to customers' positive perception of the company's pricing and overall cost of deployment. Given that many buyers remain cost-conscious, this gives Ataccama an opportunity to exploit in the face of competition from much larger and well-established vendors.

Cautions

- Conflict with a substantially stronger channel partner: Although its OEM relationship with Information Builders has been a great advantage as Ataccama attempts to grow in this market, it also introduces a competitive challenge. Information Builders is increasing its focus on this market and will compete directly against Ataccama with greater resources and capabilities. This could limit Ataccama's growth, especially in North America and Western Europe.

- Limited market presence, mind share and skill base: As one of the newer entrants to this market, Ataccama has yet to establish a significant presence, and customers outside Eastern Europe tend to cite challenges in relation to the availability of skills. This is an issue of size and adoption, rather than a reflection of any regional limitations in Ataccama's technology. The vendor addresses this challenge to some degree by providing high-quality professional services and support — something also noted by reference customers.

- Alternative delivery models: Though SaaS and cloud-based deployments of data quality capabilities still constitute only a small part of the overall market demand, interest in them is growing. To date, Ataccama has shown minimal activity in this area. The vendor indicates it is exploring SaaS delivery of data quality operations such as data quality assessment services, but currently has no specific offerings or planned deliverables in this direction.

- Integration capabilities: Although Ataccama offers additional functionality in the related market of MDM solutions, it does not have a direct presence in the data integration tools space. Integration capabilities of this type, as well as the ability to integrate data quality tools with packaged applications and other technologies in complex environments, are cited by some customers as a weakness. Ataccama states that some customers use Data Quality Center as an extraction, transformation and loading (ETL) tool, addressing some of their bulk/batch data flow needs.

Datactics

Headquarters: Belfast, U.K.

Products: Data Quality Platform, Data Quality Manager, Master Record Manager

Customer base: 115 (estimated)

Note: Datactics did not engage with Gartner to provide input for this analysis. The analysis is based on Gartner's most recent interactions with the vendor, feedback from existing and prospective customers, Gartner's revenue and market share estimates, and publicly available information.

Strengths

- Breadth and integration of functionality: The Datactics platform supports the core data quality functionality of profiling, matching, cleansing and monitoring. Additional functionality provides support for data quality scorecards. The vendor's Master Record Manager product supports data stewardship activities such as review and tracking of changes to master data. Because all capabilities are based on a single technology, the Data Quality Platform, integration between the various components is seamless and leads to reduced complexity of deployment.

- Domain-neutral capabilities: While many competitors exhibit strength in the customer data domain, Datactics exhibits strength in addressing quality issues in product and materials data. This is an area of high (and growing) demand, one that is less crowded with incumbent solutions and large providers, and that therefore affords Datactics a good opportunity for growth. In addition, Datactics' technology is generally applicable to a wider range of data domains — although much of its recent activity concerns product data, its installed base includes examples of customer data deployments.

- Usability and performance: Reference customers and prospective clients cite ease of use of the Datactics platform, particularly for non-technical staff, as a major reason for selecting or considering Datactics. The platform's performance is also rated highly by reference customers.

- Good use of partner channels and alternative delivery models: Datactics has shifted its sales strategy toward a partnership model, with the vast majority of its revenue now coming from the system integrator (SI) and consultancy channel. While most of Datactics' deployments are on-premises, it also offers hosted, cloud-based solutions.

Cautions

- Limited market presence and mind share: Datactics rarely appears in Gartner client inquiries, and recent studies of data quality tool users indicate that it only infrequently makes the list of vendors considered in competitive evaluations. In addition, Datactics struggles to gain mind share outside EMEA. To that end, Datactics has established a local presence in the U.S., which, it hopes, will enable it to increase its small North American customer base.

- Sole focus on partner channel limits customers' awareness of Datactics: With many end-user organizations evaluating and selecting data quality tools directly, Datactics' decision to engage only through indirect channels means it is likely to miss opportunities to compete. Diversification of its go-to-market approach, although increasing costs, could prove a better strategy. Further marketing activities to increase its mind share could also prove useful.

- Strategy in light of the trend for convergence with the data integration tools market: Datactics' focus exclusively on delivering data quality technology contrasts with that of most competitors, since they are also active in the related market of data integration tools. As demand is increasingly for combinations of these capabilities, this could make Datactics a less valuable partnership choice for SIs and a less attractive option for buyers.

DataMentors

Headquarters: Wesley Chapel, Florida, U.S.

Products: DataFuse, ValiData, NetEffect

Customer base: 100 (estimated)

Strengths

- Coverage of core data quality capabilities: The DataMentors product set includes functionality addressing the main data quality operations of profiling, parsing, standardization, cleansing and enrichment. Reference customers identify matching as a key strength, noting particularly the ability to customize the matching approach to deliver a high degree of accuracy. During 2011, DataMentors made enhancements focused on enrichment, including improvements in geocoding, consumer and business contact and demographic data, and drive-time data for routing applications.

- Focus on customer/party: Although this vendor's technology is applied by some customers to other domains, customer data is by far the most active area of usage, and it is this area where DataMentors has substantial strength, specifically in matching, linking, standardizing and cleansing customer records, customer contact details and other customer-related attributes. DataMentors exploits this strength predominantly by positioning its offerings to address applications in the areas of CRM, marketing, campaign management and customer service.

- Support for alternative delivery models: Among vendors in the data quality tools market, DataMentors exhibits the highest percentage of customers using hosted and SaaS deployment approaches. In a recent survey of reference customers, approximately 77% were found to be using these approaches. DataMentors' experience in this area will serve it well as SaaS and cloud-based provision of data quality services continues to grow.

- Support and service: Compared with its main competitors, including market leaders, DataMentors is rated more favorably by customers with regard to the quality and timeliness of product support and the quality of professional services. DataMentors continues to improve in this area, having reorganized its product support group and enhanced its support processes to focus on even greater timeliness and quality.

- Recent revenue growth: According to Gartner's revenue estimates, DataMentors' revenue grew much faster than the market average in 2011. Although, given DataMentors' small size, this translated into minimal market share growth, it shows that this vendor has the ability to remain in the market.

Cautions

- Limited market presence and mind share: DataMentors continues to struggle to gain recognition in this market, as evidenced by its limited appearances in Gartner client inquiries and in studies analyzing commonly considered vendors in competitive evaluations. For the past 12 months, the vendor's customer base has been virtually flat. Although DataMentors can at present achieve revenue growth from its existing customers, to continue to grow it must expand into new accounts.

- Imbalance in data domain support and use cases: DataMentors has chosen to focus primarily on customer data quality issues and satisfying business users' demand for rapid deployment of solutions. Although these areas represent opportunities at present, the narrower focus that it entails, relative to DataMentors' larger competitors, could place this vendor at a competitive disadvantage. DataMentors' reference customers included several that used its technology in product data quality and financial data quality applications, but their number was small compared with those of competitors.

- Technically oriented product road map: DataMentors' product road map includes many valuable enhancements, but they tend to be very technical in nature. As such, its product road map lacks focus on key trends that are extending the scope of the data quality tools market — specifically, functionality focused on data stewards and other business roles to enable their active participation in information governance processes. DataMentors needs to sharpen its focus here, and particularly improve the profiling, visualization and workflow capabilities required to support those roles.

- Platform support and strategy in light of market convergence trends: DataMentors' product set remains limited to Windows-based deployments, though Version 6.0, scheduled to launch at the end of 2012, brings a number of technical and infrastructural enhancements, and the vendor states it will include support for Unix and Linux in that release. In addition, DataMentors is one of the few competitors without a presence in the related markets of data integration tools and MDM solutions. With these markets rapidly on the path to convergence, this creates a risk when competing with vendors of similar or larger size that have broader data management offerings.

Human Inference

Headquarters: Arnhem, Netherlands

Products: HIquality Suite, HIquality Name Worldwide, HIquality Identify, HIquality Data Improver, DataCleaner

Customer base: 280 (estimated)

Strengths

- Breadth of functionality: Human Inference's product set covers all the functional elements needed to address contemporary data quality demand, including data profiling, general parsing and standardization, matching, merging and enrichment.

- Deep experience in EMEA customer/party data issues: The vendor's greatest competency is in cleansing customer/party data, specifically names, addresses and other identifying attributes. Reference customers identify Human Inference's deep knowledge of the linguistic and cultural nuances of European data as a key reason for selecting the HIquality Suite. Building on this customer data competency, Human Inference has expanded into the related market for customer MDM solutions. Although this expansion effort is still at an early stage, it aligns with an important convergence trend that sees data quality tools and MDM solutions increasingly tied together.

- Alternative delivery models: Of the vendors in the data quality tools market, Human Inference exhibits one of the most significant focuses on SaaS and cloud computing. Combined with its traditional on-premises deployments (which account for the majority of its work to date), this represents healthy diversification and a strong vision for how customers will increasingly want to consume data quality capabilities. The vendor's strategy is to enable on-premises deployments and broad distribution in the cloud via the same technology platform.

- Diverse licensing models and related partnerships: Human Inference also shows good diversity in its licensing and pricing models. The open-source DataCleaner solution, acquired by Human Inference in 2011, offers customers another lower-cost entry point into the vendor's capabilities and is a good response to other EMEA-based competitors that also offer open-source or very-low-cost solutions. In addition, DataCleaner has enabled Human Inference to establish a deep partnership with Pentaho, a popular open-source business intelligence (BI) platform vendor, through which DataCleaner functionality is made available to Pentaho Data Integration customers.

Cautions

- Growth below the market average: Although Human Inference returned to profitability and achieved substantially stronger revenue growth in 2011 than in 2010, its revenue growth remained below the market average. Customers (including reference clients provided by Human Inference in support of this analysis) ask questions about the vendor's market presence and ability to grow and compete, given its relatively small size. However, at the same time, Human Inference's customers have typically been using its tools for more than three years, and they consider Human Inference to be their enterprise standard for data quality technology. This indicates strong retention within the company's customer base.

- Support for non-customer/party domains: Human Inference's strategy centers on strong support for customer data, but its limited experience and capabilities in other data domains are in conflict with demand trends. Buyers increasingly desire functionality to address other key master data domains, most notably product and materials data. Human Inference's reference customers indicate a more severe imbalance across data domains than is the case for any of its main competitors (in a recent survey, fewer than 15% of Human Inference's reference customers showed any activity outside customer data), and customers applying the vendor's tools to product data, for example, cite this as an area of relative functional weakness.

- Installation and initial configuration: As noted in previous analyses, Human Inference's reference customers desire greater ease of implementation and less complexity in deployment. The latest versions (6.x) of the company's technology include enhancements in these areas, but it appears that many customers are still running older versions. In addition, the open-source DataCleaner offers good ease of use and rapid deployment for more targeted data quality improvement activities.

- Limited presence beyond EMEA: Human Inference's stated objective is to become the best European data quality tools vendor. Its customer base reflects its strong focus on EMEA, with other regions accounting for only a very minor percentage of installations and revenue. Given the global nature of this market, the economic challenges facing Europe, and the increasing availability of data quality technology from much larger North American vendors also focusing on EMEA, Human Inference's lack of a broader geographic presence could be a barrier to growth.

IBM

Headquarters: Armonk, New York, U.S.

Products: InfoSphere Information Analyzer, InfoSphere QualityStage, InfoSphere Discovery

Customer base: 2,000 (estimated)

Strengths

- Breadth of functionality: IBM's Information Analyzer (for discovery, profiling and analysis) and QualityStage (for parsing, standardization and sophisticated probabilistic matching), augmented by IBM's related products for entity resolution, cover the major functional capabilities in demand in this market.

- Installed base and diversity of usage: IBM's tools continue to be adopted as enterprisewide data quality technology standards, and many IBM customers are applying the tools to multiple and diverse project types. The tools are applied across a range of data domains, for a variety of use cases (from BI to data migration to MDM), and by teams of varying size. Increasingly, the profiling and discovery functionality of IBM's product set is used by IBM customers to support the work of information governance teams and programs.

- Synergy with related InfoSphere products: IBM positions its data quality capabilities for stand-alone deployment, as well as in support of and in a synergistic relationship with, other InfoSphere capabilities, such as its data integration tooling (primarily DataStage) and MDM offerings. The combination of broad data management functionality and integration between data quality tools and other components of the portfolio, achieved via common and shared metadata, is often cited by reference customers as a key point of value.

- Vendor mind share and market presence: IBM's significant mind share (as measured by frequency of appearance in Gartner client inquiries and competitive evaluations by data quality tool users), market presence and scale in data management markets and beyond contribute to its strong ability to execute.

- Product road map and recent product releases: Recent releases of QualityStage (and other InfoSphere components) have focused heavily on improving usability. Most recently, v8.7 of the product simplified installation and introduced general ease-of-use enhancements. IBM claims that several hundred customers have already moved to this version or are in the process of doing so. IBM's product road map is largely oriented toward fueling customers' information governance efforts by adding more functionality focused on business roles such as data stewards and data governance teams, to facilitate policy and rules development, improve glossary and metadata usage, and generally drive higher levels of engagement outside the IT department. The road map also includes several "big data"-related enhancements, such as improved connectivity to Hadoop and the ability to discover relationships across unstructured data sources.

Cautions

- Limited proof points for latest versions: Only about 12% of the reference customers identified by IBM in support of this analysis indicated that they actively use the latest version of the tools (v8.7). However, customers state that they perceive the latest version as promising in terms of improving the usability of IBM's tools. Also, IBM indicates that 65 of its 100 largest customers have moved, or plan to move, to v8.7, and that the v8.7 adoption rate has accelerated far beyond what is reflected in the reference sample.

- General usability challenges: IBM's data quality tool customers continue to identify longer learning curves, greater complexity and longer time to value as challenges. Data regarding data quality tool deployments continues to show IBM implementations taking longer than the market average. Although this is likely to be partly due to the more complex problems addressed by some of IBM's customers, IBM customers of all types commonly express a desire for improved usability. IBM continues to deliver enhancements aimed at improving ease of use for the roles of developer, administrator and data steward, such as prepackaged data validation rules, an operations console, and business glossary and data discovery enhancements.

- Cost model: Customers commonly identify the cost of procuring and deploying IBM's data quality products (due to the usability challenges noted above) as a challenge. Although customers perceive a decent correlation of price to value in IBM's tools, IBM's reference customers and many prospective customers indicate that prices can be prohibitive and their perception is of a high total cost of ownership (TCO). Among survey participants that had included IBM in competitive evaluations of multiple vendors' offerings, pricing model, price points and perceived TCO were the top reasons for disqualifying IBM from further consideration. IBM's introduction of server and workgroup editions is aimed at mitigating these concerns by offering commonly used bundles of components and entry-level prices suitable for smaller customers and implementations.

- Limited SaaS and cloud-based delivery: Though SaaS and cloud-based deployments of data quality capabilities still account for only a small part of overall demand, interest in them is growing. To date, IBM's activities and traction in this area within its data quality tools customer base have been minimal, although its data quality tools have been deployed on Amazon's public cloud infrastructure. IBM claims to be working toward SaaS delivery of data quality operations, but has not publicly announced specific deliverables or the timing of availability.

Informatica

Headquarters: Redwood City, California, U.S.

Products: Data Explorer, Data Quality, Identity Resolution, AddressDoctor

Customer base: 1,500 (estimated)

Strengths

- Breadth of functionality, applicability and usage: Informatica's data quality products address the full range of major functions in demand in this market. These include data profiling, parsing, standardization, matching, entity resolution and generalized cleansing capabilities. This vendor's tools are commonly used for a diverse range of projects and data domains — the domain-neutral nature of the core data quality components (Data Explorer and Data Quality) aligns well with the continued demand for broader data quality tool deployments.

- Market presence and brand awareness: Although Informatica is still better known for its data integration capabilities, it has been steadily increasing its presence in the data quality tools market and now enjoys a significant degree of brand awareness. Gartner client inquiries and recent studies of data quality tools customers indicate that Informatica often appears on the shortlists of organizations evaluating tools in this market. Also, the customer base for Informatica's data quality tools continues to grow, which further increases the available skill base.

- Synergies with related data management products and the product road map: Informatica's strategy is to be a broad data management infrastructure technology provider, and this means its data quality tools are part of a much larger portfolio. Increasingly tight integration with the vendor's data integration tools aligns well with trends in market demand, and Informatica has an opportunity to increase the traction of its MDM offering by enabling deeper integration with its data quality tools (something it plans to do in the forthcoming version 9.5 of its platform). In addition, Informatica's recent work on connectivity and deployment for Hadoop could help support data profiling and discovery in "big data" environments.

- Alternative delivery models: As one of the early proponents of cloud-based delivery models for data integration, Informatica has the opportunity to use the knowledge it gained from that initiative to help it deliver broader data quality capabilities in a similar fashion. Although Informatica's offerings are currently limited to cloud-based address standardization and validation, its product road map calls for broader cloud-based data quality services in releases beyond v9.5.

- Service and support: Gartner's recent interactions with Informatica's reference customers reaffirm that this vendor delivers high levels of quality and achieves high levels of customer satisfaction with regard to product support, professional services and general customer service. Some longtime Informatica customers have stated that as this vendor continues to grow and add more capabilities and product lines, its consistency, quality, and speed of response in product support occasionally fall below previous levels. However, since Informatica has historically exceeded the market's expectations in this area, it remains an area of strength.

Cautions

- Rapport with, and recognition by, non-IT leaders: With data quality being a business issue and Informatica attempting to take a position increasingly focused on data governance, this vendor's lack of visibility and recognition with key non-IT roles (both leadership and otherwise) will be a challenge. Information governance topics — data quality, MDM and related initiatives — increasingly require engagement and strong buy-in beyond the IT department. Although Informatica is executing technology enhancements to engage data stewards and related business roles in the process of managing data quality and has started to modify its messaging accordingly, it must continue to build momentum and recognition for its efforts to address strategic and business issues, rather than just technology infrastructure.

- Cost model: Informatica's existing and prospective customers often express concern about its high prices (relative to some competitors) and the perceived TCO of its data quality tools (which includes a significant learning curve and investment in skills). Customers that purchase and deploy Informatica's tools generally express reasonable satisfaction with the value they deliver, but prospective customers that disqualify Informatica during competitive evaluations do so primarily because of its pricing model and price points and their perception of the TCO.

- Competitive landscape and decline of OEM relationships: Although Informatica has continued to grow in size and market presence, much of its main competition still comes from substantially larger vendors, many of which have partnered with Informatica in the past due to the complementary nature of their technologies. Many of these partners have, over time, acquired their own data quality technology, obviating the need for any type of OEM or reseller relationship. Although these developments are not an immediate threat to Informatica's well-being, they make it more challenging for Informatica to compete directly against the "full stack" providers. In addition, some of Informatica's direct competitors that have also been OEM consumers of Informatica's AddressDoctor offerings for address standardization and validation are starting to work with alternative providers.

- Integration of product components: Informatica must continue to unify and integrate the diverse data quality tools that it has acquired. This is identified as a challenge by customers from both a packaging and a pricing point of view, as well as from a product deployment and management perspective. Informatica continues to improve in these areas, as is shown by, for example, more consistent user interfaces, workflow functionality, embedded profiling capabilities and metadata repository integration.

Information Builders/iWay

Headquarters: New York, New York, U.S.

Products: iWay Data Quality Center

Customer base: 100 (estimated)

Strengths

- Breadth of functionality and multidomain support: Information Builders' data quality functionality, delivered via the iWay Data Quality Center product, addresses all core areas of functionality expected in this market. Customer deployments reflect usage across a diverse range of data domains, including customer, product, financial and location data, among others. Reference customers identify configurability and general usability as positives.

- Strong presence in BI platform market and overall vendor size and viability: The longtime and significant presence of Information Builders in the BI platform market represents a considerable strength that it can exploit. By harvesting its installed base of BI customers, Information Builders can cross-sell data quality tools into accounts that are already comfortable with its other technologies.

- Visualization and other user-facing capabilities: Aided by the BI know-how of Information Builders, the vendor's data quality tools exhibit strong support for presentation, analysis and tracking of data-profiling results. Other user-facing functions, such as a workflow and an interface for data quality issue tracking and resolution, cater well to the need to engage data stewards and other non-IT staff in data quality improvement.

- Links to data integration and MDM: Information Builders' data quality tools are integrated with its MDM offering as part of the iWay Enterprise Information Management (EIM) Suite. In addition, given Information Builders' strength in data integration and integration capabilities in general, it is able to present combinations of related products that are well-aligned with trends in overall demand.

Cautions

- A relative unknown in the data quality tools market: Information Builders entered this market only just over three years ago and has yet to establish itself as a known and respected vendor of data quality tools. This is reflected in a relatively low volume of Gartner client inquiries. Despite the vendor's highly relevant technology, marketing and mind share have been historic weaknesses of the iWay brand in other markets, and they will also impair Information Builders' execution with its data quality tools.

- Product support and documentation: Information Builders is a new entrant to this market, and the immaturity of its data quality tools (compared with many of its competitors' offerings) manifests itself in inconsistent support for customers. Reference customers rate Information Builders' product support as substantially below the market average, and they note that its documentation could be improved. In addition, customers recognize that the skill base and user community around the tools are extremely young. One way in which Information Builders is trying to mitigate these concerns is by offering more prepackaged and industry-specific functionality, which reduces the need for customized and complex implementations by customers.

- EIM Suite positioning: Information Builders' positioning of the iWay Data Quality Center in its iWay EIM Suite of tools delivers a message to customers that data quality (and EIM) is largely about technology. There is a risk that this, along with Information Builders' historical tendency to take a technical positioning with its iWay products in general, may inhibit recognition of this vendor as a strategic partner for customers undertaking data quality and other EIM-related initiatives. Information Builders is taking action to orient its position toward the strategic and business-value needs of customers through campaigns focused on data governance and information management strategy, publication of a book by its thought leader on data governance, and related activities.

Innovative Systems

Headquarters: Pittsburgh, Pennsylvania, U.S.

Products: i/Lytics Data Quality, i/Lytics Data Profiling, i/Lytics ProfilerPlus, FinScan

Customer base: 780 (estimated)

Strengths

- Functionality focused on customer data-matching and cleansing applications: Innovative's i/Lytics platform provides proven capabilities based on the company's deep experience in customer data matching and cleansing applications. i/Lytics provides strong support for both mainframe and distributed platforms, and enables data quality functionality to be exposed via service interfaces. Reference customers rate Innovative's matching and entity resolution, geocoding, parsing, standardization and cleansing, and batch processing reliability and scalability as substantial strengths.

- Track record and market longevity: Innovative has competed in this market longer than most vendors — its history spans nearly four decades — and a very high percentage of its customers (relative to the market average) have used its software in production for three years or longer. Innovative's reference customers award high scores for product support, professional services and overall satisfaction with the vendor relationship. Innovative showed above-average growth in 2011, albeit from a relatively small market share.

- Focus on compliance watch list screening: Innovative continues to expand its FinScan offerings for compliance watch list screening, an area that continues to attract strong demand and that accounts for most of Innovative's growth in this market. FinScan offerings are supported by traditional on-premises software deployment, as well as a SaaS model, which makes it easy for customers to embed screening operations directly into critical business processes.

- Performance and scalability: Reference customers rate Innovative very highly for its performance and ability to scale up to address large data volumes. Many of Innovative's customers compete in the financial services sector, where performance is crucial due to the large volumes of data processed in batch mode and the often stringent response time requirements of real-time settings.

- Product road map and expanded functionality for data governance: Innovative has expanded its strategy to include functions beyond its core strength of data cleansing. Its product road map shows an increased focus on data profiling with improved reporting capabilities and an ETL offering (via an OEM relationship) to support customers' basic data integration needs — both are scheduled for delivery in 3Q12. In addition, Innovative has begun to address the growing demand for technology to enable information governance activities with a new i/Lytics ProfilerPlus product that enables trending and visualization of data quality metrics.

Cautions

- Heavy emphasis on customer data: Although Innovative's focus on customer data is also a key strength, the vendor's relative inexperience and limited capabilities in other data domains are in conflict with trends in demand. Buyers increasingly want functionality to address other key master data domains, notably product and materials. Innovative's reference customers indicate a more severe imbalance across domains than is the case for any of this vendor's main competitors, and customers applying its tools to product data identify support for this domain as an area of functional weakness.

- Experience mainly in the financial services sector: Innovative's traditional strength from an industry perspective has been in financial services, an industry that still represents about 60% of its direct customer base. Although this remains a strength due to the high demand for data quality capabilities in this industry, it also represents a challenge to Innovative's ability to execute. Since industry experience is an important buying criterion for prospective customers, Innovative must continue to diversify its experience by also targeting key industries where it has limited presence, such as healthcare and government. A recent survey of a sample of Innovative's reference customers revealed almost no diversity, but Innovative claims to have customers in over 20 industries.

- Usability and business-facing functionality: Data quality functionality that directly touches on non-IT roles represents a new area for Innovative. As yet, few customers have adopted its data profiling and governance-related products. Innovative will need to continue to expand its capabilities for data stewards, business analysts and non-IT stakeholders in order to sustain its growth.

- Limited mind share and market presence: A continual challenge for Innovative is simply to get recognized by prospective customers amid the strong and pervasive marketing and sales activities of many substantially larger competitors. Prospective purchasers of data quality tools who use Gartner's client inquiry service rarely mention Innovative. Furthermore, Innovative made an extremely small number of appearances in competitive evaluations, as measured in a recent survey of data quality tool users.

Oracle

Headquarters: Redwood Shores, California, U.S.

Products: Oracle Enterprise Data Quality, Oracle Enterprise Data Quality for Product Data

Customer base: 250 (estimated)

Strengths

- Breadth of functionality: Across its data quality products, Oracle provides data profiling and capabilities for standardization and cleansing, matching and enrichment. Thanks to technology acquired in the past two years with the purchases of Datanomic and Silver Creek Systems, Oracle Enterprise Data Quality is multidomain-capable, and has strengths in both the customer data and product data domains. In particular, Oracle's depth of functionality and experience in product data quality differentiates it from many competitors.

- Usability: Customers using Oracle Enterprise Data Quality — an offering targeted at the customer data domain and that accounts for a significant majority of Oracle's data quality customers — generally identify its ease of use, for profiling and general data cleansing, as a key point of value. However, customers using the Product Data component of the same offering report the opposite experience, and sometimes identify its complexity as a challenge.

- Ability to draw on a large customer base for applications and database management systems (DBMSs): Oracle has a great deal of potential to grow its presence, revenue and share in the data quality tools market by cross-selling to its very large application, BI/analytics and DBMS customer bases. Oracle is developing its strategy to take advantage of this opportunity, and can provide deeper integration with various parts of its product portfolio — applications, in particular — in order to maximize its revenue and market share.

- Links to data integration and MDM products: Oracle's presence in the related markets for data integration tools and MDM solutions aligns well with trends in demand. Oracle will execute a co-selling strategy in which its data quality tools are attached to its data integration tools and positioned toward IT buyers, and its MDM products are positioned toward line of business buyers (as well as IT).

Cautions

- Functional overlaps and the need to further integrate acquired products: Although we expect Oracle to work to converge its two data quality products (in terms of user interface, installation and operations), customers wanting to apply the full range of functionality across diverse domains still need to deploy them both, which increases complexity and cost. Oracle has developed a basic level of interoperability between the two engines, but until full convergence occurs its product road map is focused on technical enhancements specific to one data domain or the other. Reference customers mirror this picture, as they all run one product or the other, not both.

- Fewer referenceable customers for product data quality: Despite significant and growing demand for product data quality capabilities, Oracle's customer base seems to reflect an imbalance across the two data quality products, with far fewer referenceable implementations for Product Data than for Oracle Enterprise Data Quality for customer data. Oracle needs to focus on increasing the number of product-data-related implementations in its customer base in order to increase the related skill base and pool of references, and should be able to capitalize on the strength of its product data quality capabilities to do so.

- Pricing, product support and availability of skills: Oracle's reference customers are less satisfied with the various non-product aspects that contribute to the customer experience than is the case with those of its competitors. Specifically, they identify as weaknesses the high cost of the products relative to their perceived value, the quality of product technical support (particularly for Enterprise Data Quality for Product Data), and the availability of skills for these products inside and outside Oracle.

Pitney Bowes Software

Headquarters: Stamford, Connecticut, U.S.

Products: Spectrum Technology Platform

Customer base: 2,600 (estimated)

Strengths

- Breadth of functionality: Pitney Bowes offers the typical range of core data quality functions most relevant to current market demand, including data profiling, parsing, standardization, matching, cleansing and enrichment. These functions are delivered within the context of the company's Spectrum Technology Platform. Customers identify as key strengths this vendor's functionality for customer name and address cleansing, as well as matching.

- Focus on customer/party and location data: Historically, Pitney Bowes has focused on customer data quality issues and associated location-oriented aspects such as address management, geocoding and spatial analytics. The vendor's experience in, and proven support for, such requirements represent its most substantial strength in this market. Specifically, its location-oriented enrichment and intelligence capabilities (geospatial analytics functionality) represent a significant point of differentiation.

- Product road map and related capabilities for data integration and MDM: Pitney Bowes continues to encourage customer migration to, and new sales of, Spectrum, which also includes its data integration and MDM functionality (released to the market as part of v8.0 in 2Q12). As the convergence trend continues for these markets, Spectrum appropriately aligns Pitney Bowes with emerging market demand. This vendor's product road map includes a strong focus on social network data and network analysis capabilities — in effect, the enhancement of its existing relationship identification capabilities to include other concepts represented in the world of social graphs.

- Existing customer base and market share: Pitney Bowes' many data quality tools customers make it one of the market share leaders, with its presence heavily concentrated in North America. During 2011, Pitney Bowes achieved revenue growth substantially above the overall market rate and well above the rate Pitney Bowes achieved in 2010. It attributes this growth to a sharper focus on selling and marketing Spectrum.

Cautions

- Heavy emphasis on customer/party and location data: Although this emphasis represents a source of strength for Pitney Bowes, it also poses a challenge in light of trends in demand. Buyers increasingly seek multidomain-capable data quality tools, with strong support for product and materials data being in high demand. Although Pitney Bowes' technology is relevant to various data domains, its perceived lack of experience and lack of capabilities in this area are viewed as weaknesses by current and prospective customers. The vendor's product road map strengthens this perception, as it focuses mostly on enhancing the company's core capabilities for customer/party and location data.

- Data profiling and visualization capabilities: Pitney Bowes has introduced data profiling and visualization capabilities to its product set in several major releases, but uptake of these appears limited, and customers rate these capabilities as an area of weakness in comparison with competitors' offerings. With demand growing for technology to enable information governance initiatives, these are the types of capability that are in high demand. Pitney Bowes expects further releases in 2012 to improve adoption in these areas.

- Marketing execution and mind share: Despite its strong market share position, Pitney Bowes needs to substantially increase its marketing emphasis on, and investment in, Spectrum. Compared with the market leaders, this vendor's appearance in clients' calls to Gartner's inquiry service and in competitive evaluations in general is fairly infrequent. Given its historical focus on mailing automation from a hardware point of view, Pitney Bowes needs to focus even more sharply on this issue as it lacks strong recognition as a software brand. It hopes to improve in this area by increasing its presence at relevant industry events, cross-selling Spectrum to the broader Pitney Bowes customer base, and integrating and embedding Spectrum into many of its new solutions.

RedPoint (DataLever)

Headquarters: Wellesley Hills, Massachusetts, U.S.

Products: RedPoint Data Management

Customer base: 150 (estimated)

In 4Q11, RedPoint acquired DataLever, which appeared in several previous iterations of this Magic Quadrant.

Strengths

- Solid coverage of core capabilities: RedPoint supports the core requirements of data quality, with data-profiling and general-purpose data-cleansing functionality, including parsing, standardization, matching and cleansing. Reference customers identify the strength of its out-of-the-box rules for addressing common data quality operations and the flexibility to optimize rules for their specific needs as key points of value.

- Integrated product offering: Unlike most of its key competitors, RedPoint provides its range of data quality capabilities in a single product. This reduces complexity for customers and streamlines the process of converting profiling insights into rules for data cleansing. In addition to data quality functionality, its Data Management product also supports physical data movement via ETL functionality, which is consistent with the trend for convergence between this market and the data integration tools market.

- Ease of use: RedPoint's tools tend to have an attractive learning curve and relatively rapid times to deployment, thanks to very good usability characteristics. Reference customers very often identify usability as a key factor in their decision to select this vendor.

- Performance: RedPoint's customer base includes implementations with high-volume workloads (from many millions up to billions of records) that are processed in modest time frames. This contributes to the extremely strong performance satisfaction ratings that RedPoint receives from its reference customers.

Cautions

- Limited mind share and market presence: RedPoint is generally unknown in the data quality tools market, as evidenced by Gartner's receipt of no client inquiries about RedPoint during the past year and by RedPoint's extremely low number of appearances in competitive evaluations as measured in a recent survey of users of data quality tools. Marketing was a significant weakness for DataLever, and although its acquisition and rebranding by RedPoint improves the outlook for the technology, RedPoint must take action to address this major challenge to its ability to execute, as well as fill the gaps that DataLever exhibited in its product documentation and in skills availability.

- Customer data focus: RedPoint's customer base shows a heavy usage bias toward customer data, with recent reference customer interactions revealing only sparse activity in other domains such as product, location and financial data. Although RedPoint's technology is applicable to other data domains, its product road map mainly contains planned enhancements that are also oriented toward customer data (for example, accessing social media to enrich customer data).

- Technical positioning and road map: RedPoint prides itself on its technical and software-engineering prowess, and this is evident in how it communicates its positioning and in its product road map. Planned enhancements are mostly technical in nature — for example, version 6.2 (planned for release in 3Q12) focuses on enhanced security, Web services support, enhanced validation of email addresses and social media "handles," and richer data profiling. Although these technical advancements will add value, RedPoint also needs to focus on higher-level capabilities to directly support and engage non-technical roles (such as business analyst, data steward and business stakeholder) in information governance-related activities.

SAP

Headquarters: Walldorf, Germany

Products: Data Quality Management, Information Steward, Data Services

Customer base: 4,600 (estimated)

Strengths

- Breadth of functionality: SAP provides a good breadth of functional data quality capabilities, including data profiling and common data-cleansing operations, which can be applied in diverse environments. The core data quality functionality in Data Quality Management enables the delivery of data quality services in an SOA context, and is used in the Data Services product (which combines data integration and the Data Quality Management functionality).

- Strong presence in application and BI platform markets: SAP has a substantial presence in the enterprise application and BI platform markets, which creates significant opportunities for it to increase its data quality tools business through cross-selling. SAP's sales and marketing strategy is clearly taking advantage of these opportunities — SAP appears with increasing frequency in Gartner client inquiries and was among the vendors appearing with greatest frequency in competitive situations as measured in a recent survey of users of data quality tools.

- Depth of integration with SAP applications and data integration capabilities: SAP exploits the market presence advantages mentioned above by continuing to deliver solid integration with its own packaged applications. In addition, tight integration with ETL functionality in the form of Data Services is recognized by customers as a main point of value, particularly for organizations seeking to embed data quality operations directly into physical data flows.

- Support for multiple data domains: SAP's strength in this market remains in applications of customer/party data quality, specifically in matching and linking, deduplication, and name and address standardization and validation. In a recent survey, all of SAP's reference customers were found to be applying its tools to customer data. However, the same sample indicated that 77% were also applying them to product and materials data, and 54% to location data. Although customers tend to desire more robust functionality for non-customer data in SAP's tools, the satisfaction of customers working in these other domains continues to increase.

- Recent product delivery and road map: Continued support for information governance programs (via enhanced scorecards, data quality task workflow and stronger support for business terms), "big data" scenarios and general improvements in usability are main areas of focus for SAP. Version 4.1 of Data Services and Information Steward, released in 2Q12, provides enhancements in these areas. Information Steward is attracting substantial interest and represents a significant improvement over the data-profiling capabilities previously available to SAP customers.

Cautions

- Limited proof points for new functionality: The new Information Steward product is intended to address the significant weaknesses in data profiling that SAP has exhibited for several years. However, although this product has been available for over a year, reference customers that use it in production are comparatively few. Of the reference customers provided by SAP for this analysis, only about 15% were using Information Steward. However, Information Steward's functionality continues to receive positive feedback from SAP customers and prospective customers that have seen and evaluated it. Also, SAP claims a substantial volume of new sales of this product during the past two quarters.

- Product support and release schedules: Customers of SAP's data quality tools continue to routinely express frustration with the processes for obtaining product support and the quality and consistency of support services. The frequency of patches and a fast-paced release schedule (which means short end-of-life times for products) are also cited as challenges. SAP states that it is continuing to focus on improvements in this area, and on expanding the availability of support resources for customers through the addition and training of service partners.

- Alternative delivery models: SAP has delivered limited SaaS and cloud-based capabilities for data quality, providing support only for public cloud implementations of its tools. Although SaaS and cloud computing still account for a minority of activity in the overall market, SAP must begin to offer data quality capabilities via a SaaS model as demand continues to build and as data quality capabilities become an increasingly important component of data management platform-as-a-service offerings delivered via cloud computing. SAP plans to deliver cloud-based data quality capabilities in 2013 as part of its cloud integration strategy.

SAS/DataFlux

Headquarters: Cary, North Carolina, U.S.

Products: Data Management Platform

Customer base: 2,500 (estimated)

Strengths

- Breadth of functionality and degree of integration: DataFlux's capabilities address all the base functional capabilities required in this market, including profiling, matching, cleansing and monitoring. These are delivered via a unified platform, although the vendor also enables customers to purchase various capabilities individually. The "single product architecture" approach reduces complexity for customers and contributes to deployment times that are shorter than the market average.

- Ease of use and breadth of applicability: Customers routinely cite very good usability as a key reason for selecting DataFlux, and indicate that ease of use enables both business and IT resources to work readily with the tools. Notable is the ability to gain insight into the state of data quality rapidly via the profiling functionality, and then to turn that insight quickly into rules deployed in data-cleansing routines. DataFlux deployments exhibit a variety of types of initiative, including BI, data warehousing, MDM, data migrations and information governance programs.

- Customer service and support: Reference customers continue to report positive experiences with DataFlux's product support and professional services, as well as a good degree of satisfaction with their overall relationship with the vendor.

- Product road map and direction: DataFlux's product road map calls for further development of its Data Management Platform, which combines data quality functionality with data integration and MDM capabilities. Specific areas for future enhancement include the ability to push execution of data quality functionality down into "big data" environments (for example, popular database appliance technologies such as those of Teradata and Greenplum, as well as Hadoop) and to exploit data quality operations within the data federation component of DataFlux's platform. This aligns well with trends in market demand that make pervasive and broadly applied data quality capabilities foundational to an organization's information infrastructure.

- Brand awareness, references and vendor viability: The DataFlux brand has gained substantial mind share in the data quality tools market, as evidenced by its frequency of appearance in inquiries from Gartner clients. It was also among the providers most frequently considered by respondents in a recent Gartner survey of data quality tool users. The vendor's parent company, SAS, provides a solid base of financial strength and global resources. DataFlux is able to provide substantial numbers of reference customers representing diverse industries.

Cautions

- Recent organization and strategy changes: In 4Q11, the DataFlux sales force was absorbed into the SAS sales organization, potentially diminishing the focus on the DataFlux brand and technologies, as distinct from SAS's analytic offerings. SAS recently announced a reorganization that eliminates the DataFlux organization as a stand-alone entity and combines all remaining DataFlux functions into SAS. This move raises questions about the importance of the DataFlux brand and SAS's desire to focus on nonanalytic information infrastructure opportunities. SAS states that the rationale for this organizational change is to increase its scale in the market by using SAS's substantial resources and customer base to compete better against other large incumbent providers.

- Limited adoption of Data Management Platform: Although the DataFlux Data Management Platform is well aligned with trends in demand, it is still young and only a minority of customers have migrated from earlier versions of DataFlux technology (dfPower Studio and Integration Server). Approximately 25% of the organizations in a recent sample of reference customers indicated they were running recent or current versions of the Data Management Platform.

- Pricing model and price points: DataFlux customers and prospective clients increasingly identify the high prices of the server-based products and lease-oriented models common to SAS as challenges to adopting the vendor's technology for enterprisewide usage, and when needing to expand their investments to address the needs of new projects. Customers' satisfaction with DataFlux's pricing model and prices is somewhat low in comparison with most of its competitors. DataFlux will need to adapt its pricing to offer more attractive entry points for customers with more modest requirements or substantial budget constraints. To start to address this challenge, DataFlux recently established a new set of use-case-specific "bundles" that enable customers to purchase only the subset of the portfolio most relevant to their needs.

- Performance and scalability: A greater percentage of DataFlux's reference customers identify performance in large-scale and complex scenarios as a challenge than is the case for its competitors. However, implementations of DataFlux's tools continue to grow in scale, and customers using the latest versions of the technology indicate they are generally satisfied with its performance.

- Alternative delivery models: DataFlux has so far delivered limited SaaS and cloud-based capabilities for data quality, with a focus on address validation via the DataFlux Marketplace. Although DataFlux has developed a strategy and execution plan for this topic, delivery has been postponed while work to align with the SAS cloud strategy continues. Although SaaS and cloud-based capabilities still account for only a minority of activity in the overall market, DataFlux needs to develop capabilities in these areas, as interest in them is growing, and data quality capabilities will be an important component of future data management platform-as-a-service offerings delivered via cloud computing.

Talend

Headquarters: Suresnes, France

Products: Talend Open Studio for Data Quality, Talend Enterprise Data Quality

Customer base: 250 (estimated)

Strengths

- Breadth of functionality and integration: Talend's data quality tools address the common functional requirements, including parsing, standardization, matching and data profiling. They are capable of supporting a range of data domains. The products are well-integrated and, thanks to their single code base, provide a less complex customer experience than that provided by some vendors.

- Usability of core functionality: Reference customers cite ease of use in the development of data quality processes and in the user interface for data profiling as advantages of Talend's tools. The product road map includes plans to improve visualization capabilities in the form of charts and graphs.

- Cost model: The combination of the free Open Studio for Data Quality product for data profiling and modest subscription pricing for Enterprise Data Quality represents an attractive option for customers seeking lower-cost options. Combined with good ease-of-use characteristics, this contributes to an attractive cost model for customers that has been a key driver of Talend's above-average revenue and customer base growth during the past 12 months.

- Product road map and links to related capabilities: As part of its portfolio, Talend offers data integration, enterprise service bus and MDM solutions that can readily make use of the data quality functionality. Although few customers have deployed all these components broadly across an enterprise, Talend's ability to position a broad set of data management capabilities, in which data quality can be pervasively present, is well aligned with trends in demand. In addition, the vendor's product road map focuses on making data quality capabilities more suitable for non-technical users (a crucial demand trend) and on expanding support for "big data" environments (in addition to the existing ability to run matching operations in Hadoop, Talend will make additional data quality operations deployable on Hadoop).

Cautions

- Referenceable customers of enterprise scale: Despite solid percentage growth in revenue and customers, Talend still has limited recognition for its data quality tools in this market's main buying centers, notably among leaders of EIM, information governance and MDM programs and initiatives. Rather, Talend's traction appears to be with the developer community, and the vendor has a limited ability to provide responsive references at high levels in customer organizations.

- Product reliability: A consistent point of feedback on Talend's technology in this market (and the related market for data integration tools) is that there is weakness in terms of product stability, particularly for new releases. Reference customers routinely report substantial issues with reliability and "bugginess," as well as challenges in keeping up with the pace of point releases and the end-of-life of prior releases. Talend is attempting to address these challenges through improvements in its testing and quality assurance processes, as well as more formalized and documented processes and timelines for release retirement.

- Support and documentation: Reference customers also frequently express frustration with the quality of Talend's product support and the weakness of its product documentation. These are common issues for vendors with offerings based on open-source technology, but they will still impair Talend's ability to capture and retain enterprise-level deployments.

- Alternative delivery models: Though SaaS and cloud-based deployments of data quality capabilities still constitute only a small part of overall market demand, interest in them is growing. To date, Talend has shown minimal activity in this area. Talend's tools can be deployed in public cloud settings, but it does not provide its own cloud services.

Trillium Software

Headquarters: Billerica, Massachusetts, U.S.

Products: Trillium Software System, TS Discovery, TS Insight, Trillium Software On-Demand

Customer base: 1,050 (estimated)

Strengths

- Breadth of functionality: Trillium offers solid functionality for all the main required capabilities sought by contemporary data quality tool buyers. These include data profiling, generalized parsing, standardization, matching and cleansing functions. In addition to the traditional on-premises deployments for which it is best known, Trillium has recently sharpened its focus on cloud-based deployments with the Trillium Software On-Demand offering.

- Brand awareness, market presence and track record: Trillium has substantial mind share in this market, and a very long and solid track record of delivering data quality solutions. The growth of its market presence and mind share is aided by its substantially larger parent company, Harte-Hanks, which has recently begun to exploit Trillium's capabilities more fully, and to make them more visible, within its marketing service offerings.

- Core data quality capabilities: Trillium's strong support for the fundamentals of data quality, including profiling, parsing, standardization and matching, is a key reason why customers continue to select this vendor and increase their investments with it. In addition, reference customers regularly identify performance in both batch and real-time scenarios as a critical point of value. Trillium continues to make progress in demonstrating the applicability of its offerings in diverse use cases and data domains. A recent survey of a sample of Trillium customers showed that, although the vast majority (84%) remained active in the customer data domain, 52% were also applying this vendor's tools to product data, 47% to location data and 36% to financial data.

- Focus on vertically oriented risk and compliance solutions: A significant part of Trillium's strategy aims to deliver more packaged solutions and services for industry-specific data-quality-related initiatives. Its first deliverables in this regard are oriented toward risk and compliance-related data governance programs in financial services organizations. Here, Trillium delivers prepackaged (but customizable) rules and dashboards, which help to monitor and expose data quality flaws and metrics to governance project teams. Trillium's product road map schedules the release of a claims data quality solution for the insurance sector in 3Q12.

- Service and support: Trillium enjoys a high degree of customer retention, in part due to its consistently strong delivery of product technical support and professional services. Trillium's reference customers generally indicate very positive experiences both in this regard and in terms of their overall relationship with Trillium.

Cautions

- General usability and complexity: Trillium's latest major release, version 13.x, brought improvements in ease of use and other functional enhancements, but reference customers (the majority of which in a recent sample had upgraded to the current major version) continue to desire better usability and reduced complexity of deployment.

- Reporting capabilities and visualization of profiling results: Trillium customers value the sophistication of profiling and cleansing capabilities provided in the vendor's tools, but they continue to express a desire for stronger visualization and reporting capabilities to engage business roles as well as IT roles.

- Strategy in light of market convergence trends: Trillium has elected to pursue a strategy that keeps its product capabilities focused on the data quality tools market. With the ongoing and rapid convergence of this market with the related markets for data integration tools and MDM solutions, Trillium's positioning is increasingly at odds with buyers' preferences for broader data management capabilities. With all of its main competitors present in each of these related markets, Trillium faces a growing competitive risk that is only partially mitigated by new OEM relationships (with vendors such as Tibco Software, Software AG and QlikTech) and through its focus on vertical solutions (as noted above).

- Skills requirements and availability: Customers often indicate a desire for skilled resources to help address complexity challenges, both in initial implementation (particularly for complex projects) and in version upgrades and technical integration with other software. These customers often struggle to find locally available consultancies and implementation partners with the necessary depth of expertise to support them well in such efforts.

Uniserv

Headquarters: Pforzheim, Germany

Products: Data Quality (DQ) Explorer, DQ Batch Suite, DQ Real-Time Suite, DQ Real-Time Services, DQ Monitor

Customer base: 1,000 (estimated)

Strengths

- Functionality focused on customer data matching and cleansing applications: Uniserv focuses heavily on the core data quality capabilities for customer name and address standardization, cleansing, matching and enrichment. It has a very long (over 40-year) track record in applications of this type, and is recognized for its large customer base in Europe and as a prominent provider for such requirements.

- Expanded vision for data quality and related markets: Uniserv has expanded its vision for the data quality tools market, increasing its focus on data profiling and monitoring, delivering expanded real-time support and adding resources that will help it offer customers more strategic consulting services in addition to technology implementation know-how. Uniserv is also taking a broader view of its position relative to related markets, by offering data integration capabilities (via an OEM relationship with Talend) and working toward delivery of a "data management services hub" that tightly combines data integration and data quality functions.

- Range of platform support: Uniserv exhibits strength in terms of runtime platform support (covering a wide variety of operating environments, including mainframes) and support for integration with most popular CRM and customer-related applications.

- Alternative delivery models: Uniserv was one of the first vendors in this market to support SaaS as a delivery model, and it currently has a higher percentage of its customer base operating in this model than most other vendors. Along these lines, the vendor is moving toward a pricing model that is based on usage, as measured by volumes of data and transactions processed.

Cautions

- Recognition and capabilities beyond customer data quality: Given the increasing demand for multidomain capabilities, Uniserv's strong focus on address standardization and validation puts it at a competitive disadvantage to providers that have a reputation for addressing a broader range of data domains. In particular, experience and functions for product data are in high demand, but Uniserv's customer base reflects little or no activity in this domain.

- Functionality beyond core data cleansing: Although Uniserv's vision has expanded to acknowledge the importance of data profiling and related capabilities (visualization and monitoring), the functionality it delivers in these areas remains a relative weakness. Reference customers show limited use of these capabilities and often identify these as areas where Uniserv has a significant opportunity to enhance and improve its product set.

- Below-average growth in revenue and customer base: Despite having a large customer base, Uniserv's growth in revenue and customers was well below the market average in 2010 and 2011. Uniserv hopes to improve in these areas via new and expanded reselling and consulting partnerships, as well as by fulfilling the expanded vision noted above.

Vendors Added or Dropped

We review and adjust our inclusion criteria for Magic Quadrants and MarketScopes as markets change. As a result of these adjustments, the mix of vendors in any Magic Quadrant or MarketScope may change over time. A vendor's appearance in a Magic Quadrant or MarketScope one year and not the next does not necessarily indicate that we have changed our opinion of that vendor. It may be a reflection of a change in the market and, therefore, changed evaluation criteria, or of a change of focus by that vendor.

Added

Information Builders/iWay.

RedPoint appears this year owing to its acquisition of DataLever.

Dropped

No vendors have been removed from this iteration of the Magic Quadrant.

DataLever no longer appears on its own because it has been acquired by RedPoint.

Pitney Bowes Business Insight now appears as Pitney Bowes Software.

DataFlux now appears as SAS/DataFlux.

Inclusion and Exclusion Criteria

For vendors to be included in the Magic Quadrant, they must meet the following criteria:

- They must offer stand-alone packaged software tools (not only embedded in, or dependent on, other products and services) that are positioned, marketed and sold specifically for general-purpose data quality applications.

- They must deliver functionality that addresses, at minimum, profiling, parsing, standardization/cleansing, matching and monitoring. Vendors that offer narrow functionality (for example, they support only address cleansing and validation, or only deal with matching) are excluded because they do not provide complete suites of data quality tools. Specifically, vendors must offer all of the following:

- Profiling and visualization — they must provide packaged functionality for attribute-based analysis (for example, minimum, maximum, frequency distribution and so on) and dependency analysis (cross-table and cross-dataset analysis). Profiling results must be exposed in either a tabular or graphical user interface delivered as part of the vendor's offering. It must be possible to store profiling results and analyze them across time boundaries (trending).

- Parsing — they must provide packaged routines for identifying and extracting components of textual strings, such as names, mailing addresses and other contact-related information. Parsing algorithms and rules must be applicable to a wide range of data types and domains, and must be configurable and extensible by the customer.